If you’re looking to boost AI inference performance in 2025, I recommend exploring PCIe accelerator cards such as the Coral Mini PCIe, the Waveshare Hailo-8, and Youyeetoo’s eight-module Edge TPU card. These cards offer high TOPS ratings, low latency, and efficient power use across a range of system sizes. Compatibility, thermal management, and scalability are the key considerations. Read on for a closer look at each option and how it might fit your needs.

Key Takeaways

- Top PCIe accelerator options like Hailo-8, Coral Mini PCIe, and Youyeetoo deliver high TOPS for efficient AI inference.

- The multi-module Edge TPU cards use PCIe Gen3 x16 slots for high-speed data transfer, while single-module options such as the Coral Mini PCIe need only a Mini PCIe slot.

- Energy-efficient models such as Coral Mini PCIe offer low power consumption, ideal for edge and embedded AI deployments.

- Adequate cooling is essential for multi-module cards under intensive workloads, and even a low-power module like the Hailo-8, which ships without a heatsink, may need added cooling.

- Scalability features enable deploying multiple modules, boosting inference capacity for demanding AI applications in 2025.

PCIe Gen3 AI Accelerator Card with Google Coral Edge TPU

If you’re looking for a compact yet powerful PCIe accelerator for edge AI inference, the PCIe Gen3 AI Accelerator Card with Google Coral Edge TPU is an excellent choice. It holds up to eight Google Edge TPU M.2 modules, providing robust AI processing capacity. It fits standard PCIe Gen3 x16 slots, is easy to install, and offers stable performance under heavy loads. It runs TensorFlow Lite models compiled for the Edge TPU, which keeps model compilation and deployment simple. Its thermal design pairs a copper heatsink with twin turbofans for efficient cooling. Weighing just over a pound, it is compact enough to fit a wide range of systems for reliable, high-performance edge AI inference.

Best For: edge AI developers and systems integrators seeking a compact, high-performance PCIe accelerator for deploying machine learning models using Google Coral Edge TPU.

Pros:

- Supports up to 8 Google Edge TPU M.2 modules for scalable AI processing

- Compatible with standard PCIe Gen3 x16 slots for straightforward installation

- Efficient thermal design with copper heatsink and twin turbofans ensures reliable operation

Cons:

- Customer rating is 2.8 out of 5 stars based on limited reviews

- Price and availability vary across retailers, potentially affecting affordability

- Still in production, but setup requires compatible hardware and some technical expertise
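Compiling a model for the Edge TPU is a single command-line step. The Python sketch below builds the `edgetpu_compiler` invocation and the output path it should produce. It assumes the Edge TPU Compiler’s `--out_dir` flag and its convention of writing `model.tflite` as `model_edgetpu.tflite`; the file paths in the usage line are hypothetical. The input must already be a fully 8-bit quantized TensorFlow Lite model.

```python
import subprocess
from pathlib import Path


def edgetpu_compile_plan(model_path: str, out_dir: str = ".") -> tuple[list[str], Path]:
    """Return the Edge TPU Compiler command and the file it is expected to write.

    Assumes the compiler names its output `<stem>_edgetpu.tflite` inside out_dir.
    """
    src = Path(model_path)
    if src.suffix != ".tflite":
        raise ValueError("the Edge TPU Compiler expects a .tflite model")
    cmd = ["edgetpu_compiler", "--out_dir", out_dir, str(src)]
    expected_output = Path(out_dir) / f"{src.stem}_edgetpu.tflite"
    return cmd, expected_output


def compile_for_edgetpu(model_path: str, out_dir: str = ".") -> Path:
    """Run the compiler (it must be installed and on PATH) and return the output path."""
    cmd, expected_output = edgetpu_compile_plan(model_path, out_dir)
    subprocess.run(cmd, check=True)
    return expected_output
```

Usage, on a machine with the compiler installed: `compile_for_edgetpu("models/mobilenet_v2_quant.tflite", "build")`. Separating the plan from the `subprocess` call keeps the naming logic easy to check without the compiler present.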

PCIe Gen3 AI Accelerator PCIe Card with Google Coral Edge TPU

The PCIe Gen3 AI Accelerator PCIe Card with Google Coral Edge TPU stands out for edge AI inference tasks that demand scalable, high-performance acceleration. Made by SmartFly Tech, it holds up to 16 Google Edge TPU M.2 modules, providing significant processing power. It fits PCIe Gen3 x16 slots and runs stably under heavy loads, with a copper heatsink and twin turbofans for reliable cooling. Well suited to deploying pre-trained TensorFlow Lite models, this plug-and-play card is easy to install and delivers robust performance for scalable edge AI applications. It is still actively sold.

Best For: edge AI developers and engineers seeking scalable, high-performance inference acceleration with easy installation.

Pros:

- Supports up to 16 Google Edge TPU M.2 modules for extensive processing power

- Compatible with PCIe Gen3 x16 slots, ensuring stable high-load operation

- Equipped with a high-quality copper heatsink and twin turbofans for efficient thermal management

Cons:

- Bulkier dimensions may require additional space in some setups

- Price and availability can vary across different platforms and regions

- Requires compatible hardware and technical expertise for optimal deployment

Youyeetoo AI Accelerator Card (CRL-G18U-P3DF)

The Youyeetoo AI Accelerator Card (CRL-G18U-P3DF) stands out for its ability to hold up to 8 Google Coral Edge TPU M.2 modules, for a combined 32 TOPS (4 TOPS per module). Its PCIe Gen3 x16 interface provides high-speed data transfer suited to edge AI applications. Because the Edge TPUs are dedicated ASICs, the card can run multiple AI analytics concurrently with low latency, making it well suited to real-time tasks like surveillance and automation. With a power draw of 36-52 watts, it offers a scalable, efficient way to boost inference throughput in demanding AI environments.

Best For: AI developers and edge computing professionals seeking high-performance, low-latency inference acceleration for real-time applications like surveillance, automation, and industrial systems.

Pros:

- Supports up to 8 Google Coral Edge TPU M.2 modules for scalable performance

- High-speed PCIe Gen3 x16 interface ensures fast data transfer

- Moderate power draw (36-52 watts) for 32 TOPS of combined inference capacity

Cons:

- Limited to specific Edge TPU modules, which may restrict hardware flexibility

- Relatively large size may pose installation challenges in compact setups

- Requires compatible infrastructure and expertise for optimal integration

Coral Mini PCIe Accelerator (G650-04528-01)

For developers seeking a compact, power-efficient AI inference solution, the Coral Mini PCIe Accelerator (G650-04528-01) stands out with its Edge TPU coprocessor, capable of up to 4 TOPS. At just over an inch in each dimension, it suits tight spaces, and it draws only 0.5 watts per TOPS. It supports TensorFlow Lite models and runs MobileNet v2 at 400 FPS, enabling high-speed image classification. Compatible with Debian Linux and Windows 10, it is a strong fit for edge AI deployments where power, size, and performance matter, and any system with a free Mini PCIe slot can use it for fast inference.

Best For: developers and engineers seeking a compact, power-efficient AI inference accelerator for edge computing applications.

Pros:

- High-performance edge TPU capable of 4 TOPS for fast ML inferencing

- Ultra-compact size (just over 1 inch in each dimension) ideal for space-constrained setups

- Low power consumption at 0.5 watts per TOPS, enhancing energy efficiency and sustainability

Cons:

- Limited to specific operating systems like Debian Linux and Windows 10, potentially restricting some environments

- Requires compatible card slot and system integration, which may involve additional hardware setup

- Supports only certain models and frameworks (e.g., TensorFlow Lite), possibly limiting flexibility for advanced or custom ML workflows
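The Coral Mini PCIe figures translate into a rough per-frame budget. This back-of-envelope Python sketch turns the 400 FPS MobileNet v2 figure into milliseconds per inference, estimates how many 30 FPS camera streams one module could serve in theory, and multiplies 4 TOPS by 0.5 W per TOPS to get peak accelerator power. The 30 FPS stream rate is an assumption, and the estimate ignores preprocessing and host overhead.

```python
def coral_mini_pcie_budget(fps: float = 400.0, tops: float = 4.0,
                           watts_per_tops: float = 0.5,
                           stream_fps: float = 30.0) -> dict:
    """Back-of-envelope figures from the published throughput and efficiency."""
    return {
        "ms_per_inference": 1000.0 / fps,       # 400 FPS -> 2.5 ms per frame
        "max_streams": int(fps // stream_fps),  # whole 30 FPS streams that fit
        "peak_power_w": tops * watts_per_tops,  # 4 TOPS x 0.5 W/TOPS = 2 W
    }


print(coral_mini_pcie_budget())
# {'ms_per_inference': 2.5, 'max_streams': 13, 'peak_power_w': 2.0}
```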

Waveshare Hailo-8 M.2 Module

If you’re seeking a high-performance AI accelerator for the Raspberry Pi 5, the Waveshare Hailo-8 M.2 module stands out thanks to its 26 TOPS Hailo-8 processor. Designed for edge AI inferencing, it supports models from frameworks like TensorFlow, ONNX, and PyTorch, enabling real-time, low-latency processing. The module is compact, can run multiple streams and models, and draws only about 2.5 W. Although it ships without a heatsink or fan and requires an M.2 PCIe slot of the kind used for NVMe SSDs, it delivers impressive inference speeds, making it a good fit for surveillance, robotics, and other low-latency AI applications. Support for both Linux and Windows broadens its versatility.

Best For: edge AI developers and enthusiasts seeking high-performance, low-latency inference capabilities for robotics, surveillance, and embedded AI projects using Raspberry Pi 5.

Pros:

- Powerful 26 TOPS Hailo-8 processor enabling rapid AI inference.

- Supports multiple frameworks like TensorFlow, ONNX, and PyTorch for versatile deployment.

- Compact design with low power consumption (~2.5W), ideal for embedded applications.

Cons:

- Arrives without cooling solutions, requiring users to find compatible heatsinks or fans.

- Installation requires an M.2 PCIe (NVMe-style) slot, which the Raspberry Pi 5 provides only through an add-on M.2 HAT or adapter board.

- Uncertain compatibility with USB-C adapters, potentially complicating external connections.

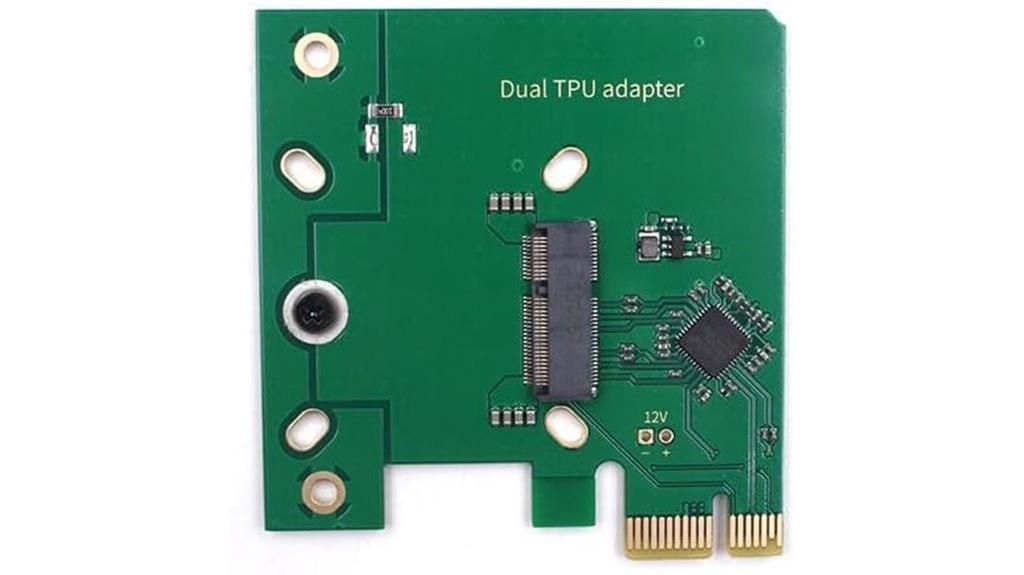

Dual Edge TPU PCIe x1 Low Profile Adapter

The Dual Edge TPU PCIe x1 Low Profile Adapter stands out for its compact design and strong AI acceleration, making it ideal for space-constrained systems. It hosts a Coral M.2 Accelerator with Dual Edge TPU, delivering 8 TOPS (4 TOPS per TPU). It works with Linux and Windows and uses a PCIe Gen2 x1 interface, so it fits any PCIe slot from x1 upward. Its low-profile form factor (2.67 x 2.56 x 0.1 inches) suits tight spaces, and its professional-grade PCB is designed for heat dissipation and signal integrity, helping sustain AI inference performance in industrial automation, edge AI, and machine learning applications.

Best For: enthusiasts and professionals seeking compact, high-performance AI acceleration solutions for space-constrained industrial automation, edge-AI, and machine learning applications.

Pros:

- Compact low-profile design fits into tight spaces

- Supports dual Edge TPU modules delivering up to 8 TOPS for powerful AI inference

- Compatible with both Linux and Windows systems for versatile deployment

Cons:

- Not compatible with Raspberry Pi Compute Module 4

- Both Edge TPUs share a single PCIe Gen2 x1 link (about 500 MB/s per direction), which may limit throughput for large inputs

- Includes only the adapter and mounting screw; the Coral M.2 Accelerator must be purchased separately

PC Diagnostic 4-Digit Card for Motherboard Testing

The PC Diagnostic 4-Digit Card stands out as an essential tool for technicians and DIY enthusiasts who need quick, accurate motherboard troubleshooting. It displays POST status codes on a dual dot-matrix hexadecimal readout, showing both the current and the previous code. It works with motherboards that have PCI or ISA slots, and because POST codes are emitted by the firmware before any operating system loads, it works regardless of which Windows version is installed. According to the listing, it can diagnose motherboard failures without a CPU or other extra parts, aiding in crash detection and system testing. Its compact size and included DuPont wires make it simple to use, though it must be seated correctly. Overall, it’s a practical device for identifying POST issues and motherboard faults efficiently.

Best For: DIY enthusiasts and technicians seeking a reliable tool for quick motherboard diagnostics and POST code analysis.

Pros:

- Fits motherboards with PCI or ISA slots and, because it reads firmware POST codes, works independently of the installed operating system.

- Displays current and previous POST error codes clearly, aiding in efficient troubleshooting.

- Compact design with included DuPont wires makes installation and testing straightforward.

Cons:

- Can be damaged or cause system issues if used improperly or with faulty cards, posing potential safety risks.

- Some users report inconsistent code readings or device malfunction after initial use.

- Troubleshooting may be complicated by unclear instructions or compatibility limitations with certain motherboards.

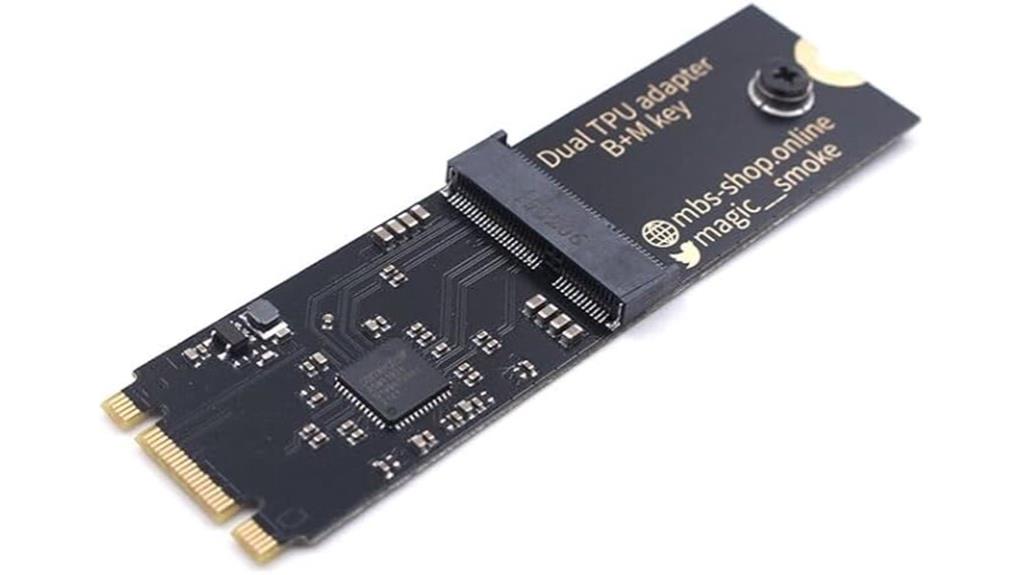

Coral Dual Edge TPU Adapter for Coral m.2 Accelerator

For those building compact AI inference systems, the Coral Dual Edge TPU Adapter offers an excellent solution by letting a Coral Dual Edge TPU module run in a single M.2 slot. It’s compatible with standard M.2 2280 B-key or M-key PCIe slots and includes a vibration-resistant stainless steel screw for secure mounting. Keep in mind that it’s designed specifically for Coral M.2 Accelerators with Dual Edge TPU; it does not support SATA M.2 slots, the Raspberry Pi Compute Module 4, or external USB enclosures. Data travels over a single PCIe Gen2 x1 link. This adapter is ideal for expanding AI inference capacity in small, optimized systems.

Best For: developers and engineers building compact AI inference systems seeking to expand their processing capacity with dual Edge TPU modules in a single M.2 slot.

Pros:

- Compatible with standard M.2 2280 B-key or M-key PCIe slots for versatile installation.

- Includes vibration-resistant stainless steel screw for secure mounting.

- Supports dual Edge TPU modules to enhance AI inference performance.

Cons:

- Not compatible with SATA M.2, Raspberry Pi Compute Module 4, or external USB enclosures.

- Requires Coral M.2 Accelerator with Dual Edge TPU modules (not included).

- Limited to a single PCIe Gen2 x1 link, which may restrict data transfer speeds for some applications.

New V8 PCIe USB Post Test Card for PC and Laptop Diagnostics

If you’re seeking a versatile diagnostic tool that works seamlessly across PCs, laptops, and even Macs, the New V8 PCIe USB Post Test Card stands out as an excellent choice. This all-in-one motherboard diagnostic kit supports multiple devices, including desktops, servers, mini PCs, and Android tablets, with various USB ports like Type-C and micro USB. It allows you to test components and pinpoint faults without disassembly, using an Android app that displays results on-screen. Although the app is outdated, the device’s straightforward design, portability, and compatibility make it ideal for technicians, DIYers, and professionals needing quick, reliable diagnostics across a range of devices.

Best For: technicians, DIY enthusiasts, and IT professionals who need a versatile and reliable motherboard diagnostic tool compatible with a wide range of devices including PCs, laptops, and Macs.

Pros:

- Supports multiple device types and USB ports, including Type-C and micro USB, ensuring broad compatibility.

- Enables fault detection and component testing without disassembly, saving time and reducing dust.

- Portable, compact design with clear diagnostics via an Android app, making it user-friendly for various skill levels.

Cons:

- The diagnostic app is outdated and may not be compatible with newer smartphones, limiting usability.

- Requires sideloading the app from unofficial sources, raising security concerns.

- Instruction manuals and app interface may be unclear or difficult to follow for some users.

Comimark Mini 3-in-1 PCIe PCI-E LPC Tester Diagnostic Post Test Card

Designed specifically for laptop technicians and DIY enthusiasts, the Comimark Mini 3-in-1 PCIe PCI-E LPC Tester Diagnostic Post Test Card offers a compact, straightforward way to diagnose hardware issues. Its lightweight design makes it easy to carry for quick troubleshooting of non-booting laptops, especially those with mini-PCIe slots. It reports POST codes over PCI, PCI-E, and LPC, but verify compatibility beforehand, as it doesn’t fit standard desktop PCIe slots. Handle it carefully, since the small device is easy to lose, and inspect it on arrival, as some units have quality issues. Overall, it’s a handy tool for targeted hardware diagnostics on compatible laptops.

Best For: DIY laptop enthusiasts and technicians needing a portable, easy-to-use diagnostic tool for troubleshooting hardware issues in laptops with mini-PCIe slots.

Pros:

- Compact and lightweight design for easy portability and quick troubleshooting.

- Provides detailed POST code information over PCI, PCI-E, and LPC.

- Suitable for diagnosing non-booting laptops, especially those with mini-PCIe slots.

Cons:

- Not compatible with standard desktop PCIe slots or most modern laptops.

- Small size increases risk of losing the device during use.

- Some units may arrive damaged or with quality control issues, and instructions may be vague.

Edupress Reading Comprehension Practice Cards, Inference, Green Level (EP63400)

The Edupress Reading Comprehension Practice Cards, Inference, Green Level (EP63400), are ideal for educators and parents seeking an engaging, straightforward tool to build inferencing skills in middle school students. This set of 54 durable, laminated cards features leveled passages and multiple-choice questions designed to improve comprehension and inferencing abilities. With self-checking answers on the back, the cards support quick assessment and independent practice. They’re versatile for centers, tests, or take-home use and align with Common Core standards. While some find the level too easy for older students, they’re highly praised for helping young learners grasp context and enjoy reading.

Best For: educators and parents seeking an engaging, straightforward tool to develop inferencing and comprehension skills in middle school students.

Pros:

- Durable, laminated cards that are suitable for repeated use and easy handling

- Self-checking answers on the back facilitate quick assessment and independent practice

- Versatile for various settings including centers, tests, and take-home activities

Cons:

- Some users find the reading level too easy for older or advanced students

- The cards may be more appropriate for younger children or special education students

- Size and level may not meet the expectations of all educators seeking more challenging materials

Factors to Consider When Choosing PCIe Accelerator Cards for Inference

When selecting a PCIe accelerator for inference, I consider how well it fits with my system’s compatibility and processing needs. I also evaluate factors like thermal management, power consumption, and how easy it is to deploy the model. These points help make sure I choose a card that’s efficient and reliable for my workload.

Compatibility With System

Ensuring compatibility between your system and a PCIe accelerator card is crucial for a smooth setup and peak performance. First, check that your motherboard has a slot that physically accepts the card’s connector, such as x16. PCIe is backward- and forward-compatible across generations and lane widths, so a mismatch, such as a Gen3 x16 card in a Gen2 slot or in an x16 slot wired for only four lanes, usually costs bandwidth rather than preventing operation. Make sure the card’s physical dimensions fit within your case, especially in compact or low-profile setups. Confirm that drivers exist for your operating system, whether you run Windows or Linux. Finally, confirm that your power supply can handle the card’s wattage and has the necessary connectors. Addressing these factors helps ensure seamless integration and maximum inference performance.
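The bandwidth trade-off in a mismatched slot follows a simple rule: the two ends of a link train to the highest generation and the widest lane count they both support. This minimal Python sketch models that rule. It looks only at the slot’s electrical width and ignores corner cases such as slots that drop lanes when a neighboring slot is populated.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PcieLink:
    gen: int    # PCIe generation, e.g. 3 for Gen3
    lanes: int  # electrical lane count, e.g. 16 for x16


def negotiated_link(card: PcieLink, slot: PcieLink) -> PcieLink:
    """Both ends settle on the fastest generation and widest width they share."""
    return PcieLink(gen=min(card.gen, slot.gen), lanes=min(card.lanes, slot.lanes))


# A Gen3 x16 card in a Gen4 slot that is only wired for four lanes.
print(negotiated_link(PcieLink(3, 16), PcieLink(4, 4)))  # PcieLink(gen=3, lanes=4)
```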

Processing Power Capacity

Choosing the right PCIe accelerator card hinges on understanding its processing capacity, which directly affects inference speed and efficiency. Capacity is usually quoted in TOPS (tera operations per second), the number of trillions of operations the card can perform each second; for these edge accelerators the figure typically refers to 8-bit integer math. Higher TOPS ratings generally allow faster inference and larger models, but the rating is a theoretical peak: real throughput depends on the model, memory access, and how quickly the host can feed data over PCIe. The number of supported AI modules or chips also matters, with some cards holding up to 16 modules for scalable performance. Keep in mind that more processing power usually means higher power consumption, so power requirements are a key consideration. Ultimately, a card with sufficient processing capacity makes real-time performance achievable for applications like surveillance, autonomous systems, and industrial automation.
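To see how far a real workload sits from the rated peak, you can convert a measured frame rate back into operations per second. The Python sketch below does this for the Coral Mini PCIe’s 400 FPS MobileNet v2 figure, assuming roughly 300 million multiply-accumulates per inference (the figure commonly cited for MobileNet v2 at 224×224) and counting each multiply-accumulate as two operations.

```python
def achieved_tops(macs_per_inference: float, fps: float) -> float:
    """Operations actually performed per second, in TOPS (1 MAC = 2 ops)."""
    return 2 * macs_per_inference * fps / 1e12


def utilization(macs_per_inference: float, fps: float, peak_tops: float) -> float:
    """Fraction of the rated peak that the measured frame rate implies."""
    return achieved_tops(macs_per_inference, fps) / peak_tops


achieved = achieved_tops(300e6, 400)   # about 0.24 TOPS
share = utilization(300e6, 400, 4.0)   # about 0.06 of the 4 TOPS peak
print(f"{achieved:.2f} TOPS achieved, {share:.0%} of peak")
```

Under these assumptions the module uses only a small fraction of its peak on a small model, which is why measured frames per second on your own model is a better planning number than the TOPS rating alone.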

Cooling and Thermal Design

Effective cooling and thermal management are critical because high-performance PCIe accelerator cards generate significant heat. A well-designed thermal system, such as a copper heatsink paired with twin turbofans, dissipates heat efficiently under heavy loads. Proper cooling prevents thermal throttling, which would otherwise lower inference throughput, and it supports the longevity and reliability of the accelerator, especially when multiple Edge TPU modules or high-power AI chips share one card. Good airflow and careful placement of cooling components keep temperatures consistent across the whole card. Overheating can cause throttling, system instability, and eventually hardware failure. Thus, prioritizing robust thermal solutions is essential when selecting PCIe AI accelerators for demanding inference tasks.
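On Linux you can watch for throttling risk by polling the accelerator’s temperature. This Python sketch parses a temperature reported in millidegrees Celsius and classifies it. The sysfs path is an assumption based on the Coral PCIe (apex) driver, other accelerators report temperature elsewhere, and the 80 °C and 90 °C thresholds are hypothetical placeholders to replace with your card’s documented limits.

```python
from pathlib import Path

# Assumed location of the first Coral PCIe Edge TPU's temperature; adjust per driver.
APEX_TEMP_PATH = Path("/sys/class/apex/apex_0/temp")


def parse_millidegrees(text: str) -> float:
    """Convert a sysfs-style millidegree reading such as '85250' into degrees C."""
    return int(text.strip()) / 1000.0


def thermal_status(temp_c: float, warn_c: float = 80.0, crit_c: float = 90.0) -> str:
    """Classify a temperature against hypothetical warning and critical limits."""
    if temp_c >= crit_c:
        return "critical"
    if temp_c >= warn_c:
        return "warn"
    return "ok"


if APEX_TEMP_PATH.exists():
    temp = parse_millidegrees(APEX_TEMP_PATH.read_text())
    print(f"{temp:.1f} C -> {thermal_status(temp)}")
```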

Model Deployment Ease

Selecting a PCIe accelerator card that simplifies model deployment can make a significant difference in your inference workflow. I look for cards that support deployment frameworks like TensorFlow Lite, ONNX, or Keras, ensuring smooth integration of pre-trained models. Compatibility with my existing hardware and OS is essential for easy installation and setup. I also check if the card offers extensive software support, such as SDKs or APIs, to streamline deployment and management. Plug-and-play connectivity is a big plus, reducing complex configurations or extra driver installations. Additionally, I review the manufacturer’s documentation and community resources, which help with efficient deployment and troubleshooting. Choosing a card with these features minimizes setup time and maximizes productivity, making inference tasks more straightforward.

Power Consumption Levels

Power consumption is a crucial factor when evaluating PCIe accelerator cards for inference, because it directly affects system stability, energy costs, and thermal management. Power draw varies widely among the cards above: the Coral Mini PCIe uses about 0.5 watts per TOPS (roughly 2 W at its 4 TOPS peak), the Hailo-8 module about 2.5 W, and the eight-module Youyeetoo card 36 to 52 W. Low-power modules suit edge devices and battery-powered systems, reducing cooling needs and extending operating time. High-performance cards with more TOPS typically draw more power, requiring stronger power supplies and better cooling. Manufacturers improve energy efficiency with purpose-built ASICs and advanced cooling designs. Check power consumption against your existing power budget to prevent overheating or instability. Balancing power with performance is key to selecting the right accelerator.
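Comparing cards on TOPS per watt makes the spread clearer. This Python sketch uses the figures quoted in this article. They are not strictly like-for-like: the Youyeetoo number covers a whole card including its fans, the other two cover a single module, and the Hailo-8 power figure is approximate.

```python
# (peak TOPS, minimum watts, maximum watts) as quoted in this article.
CARDS = {
    "Coral Mini PCIe": (4.0, 2.0, 2.0),             # 4 TOPS x 0.5 W/TOPS
    "Waveshare Hailo-8": (26.0, 2.5, 2.5),          # about 2.5 W
    "Youyeetoo CRL-G18U-P3DF": (32.0, 36.0, 52.0),  # 36-52 W for the whole card
}


def tops_per_watt_range(tops: float, w_min: float, w_max: float) -> tuple[float, float]:
    """Efficiency at the highest and the lowest quoted power draw."""
    return tops / w_max, tops / w_min


for name, (tops, w_min, w_max) in CARDS.items():
    low, high = tops_per_watt_range(tops, w_min, w_max)
    print(f"{name}: {low:.2f}-{high:.2f} TOPS/W")
```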

Installation Requirements

When choosing PCIe accelerator cards for inference, make sure your system can support the hardware. First, verify that your motherboard has a compatible PCIe slot, such as PCIe Gen3 x16 or x1, that matches the card’s requirements; an x1 card also fits any wider slot. Check that your power supply can deliver enough wattage and has the connectors the card needs. Confirm that your BIOS or UEFI firmware detects the card, and make sure there’s enough physical space inside your case for the card and its cooling. Finally, confirm that compatible drivers and software are available, so the card functions correctly after installation. These steps help prevent compatibility issues and ensure smooth integration.
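A quick way to confirm detection on Linux is to look for the card’s PCI vendor:device ID in the output of `lspci -nn`. This Python sketch scans that output for known IDs. The IDs are assumptions to verify: 1ac1:089a is the ID Coral’s documentation shows for its PCIe Edge TPU, and 1e60:2864 is my understanding of the Hailo-8’s ID.

```python
import re
import shutil
import subprocess

# Assumed vendor:device IDs; confirm against your card's documentation.
KNOWN_ACCELERATORS = {
    "1ac1:089a": "Coral Edge TPU",
    "1e60:2864": "Hailo-8",
}

# lspci -nn prints IDs as [vvvv:dddd]; class codes like [0880] have no colon.
PCI_ID = re.compile(r"\[([0-9a-f]{4}:[0-9a-f]{4})\]")


def find_accelerators(lspci_nn_output: str) -> list[str]:
    """Return the names of known accelerators found in `lspci -nn` output."""
    found = []
    for line in lspci_nn_output.splitlines():
        for pci_id in PCI_ID.findall(line.lower()):
            if pci_id in KNOWN_ACCELERATORS:
                found.append(KNOWN_ACCELERATORS[pci_id])
    return found


if shutil.which("lspci"):
    out = subprocess.run(["lspci", "-nn"], capture_output=True, text=True).stdout
    print(find_accelerators(out) or "no known accelerator detected")
```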

Hardware Size and Fit

Checking the physical size and fit of a PCIe accelerator card is essential for a smooth installation and reliable operation. First, check that the card’s dimensions fit your system’s available slot space; the low-profile Dual Edge TPU adapter covered above, for example, measures about 2.67 x 2.56 inches. Next, confirm that the card’s connector fits your motherboard’s slot: an x1 card fits x1, x4, x8, and x16 slots, but an x16 card generally needs an x16 slot. Also consider your case’s clearance and bracket height, since low-profile (half-height) cases cannot take full-height cards. Don’t forget the card’s weight, which ranges from lightweight Mini PCIe modules to heavier multi-module cards that may need extra support. Lastly, verify that the mounting and interface design fit your system’s hardware configuration.
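A simple height check catches the most common fit problem. This Python sketch compares a card dimension in inches against bracket height limits in millimetres. The limits are assumptions taken from the PCIe card electromechanical (CEM) form factors as I understand them, about 68.90 mm for low-profile and 111.15 mm for full-height cards, so confirm them against the specification or your case manual.

```python
MM_PER_INCH = 25.4

# Assumed maximum card heights per bracket type, in millimetres.
MAX_HEIGHT_MM = {"low_profile": 68.90, "full_height": 111.15}


def fits_bracket(card_height_in: float, bracket: str) -> bool:
    """True if a card of the given height stays within the bracket's limit."""
    return card_height_in * MM_PER_INCH <= MAX_HEIGHT_MM[bracket]


# The Dual Edge TPU adapter is 2.67 x 2.56 in; both sides are under 68.90 mm,
# so it fits a low-profile bracket whichever way it is measured.
print(fits_bracket(2.67, "low_profile"), fits_bracket(2.56, "low_profile"))  # True True
```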

Software Support Availability

Choosing a PCIe accelerator card with strong software support is essential for smooth deployment and efficient inference. I look for compatibility with popular frameworks like TensorFlow Lite, ONNX, Keras, or PyTorch to streamline model integration. Compatibility with my operating system, whether Linux, Windows, or an embedded OS, is crucial to avoid software conflicts. I also verify that the manufacturer provides comprehensive SDKs, APIs, and drivers to simplify development and integration. Regular software updates and an active community help maintain compatibility with new AI models and standards. Additionally, I consider whether the card’s ecosystem offers pre-compiled models, AutoML tools, or deployment pipelines, which can significantly accelerate my inference workflows. Strong software support ultimately makes AI deployment more reliable, scalable, and future-proof.

Frequently Asked Questions

How Do PCIe Accelerator Cards Impact Inference Latency?

PCIe accelerator cards can considerably reduce inference latency by providing specialized hardware that runs neural networks faster than a general-purpose CPU. I’ve seen firsthand how they offload intensive tasks, enabling quicker response times and smoother AI performance. Each inference still has to move its input and output across the PCIe link, so a fast link and compact input tensors keep that transfer from becoming the bottleneck. If you want faster AI inference, investing in a good PCIe accelerator is a smart move.
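To judge whether the link matters for your workload, estimate how long one input takes to cross it. This Python sketch assumes an 8-bit 224×224 RGB input and about 500 MB/s per direction for a PCIe Gen2 x1 link. The result is a lower bound, because packet overhead and driver latency add to it.

```python
def transfer_time_ms(num_bytes: int, link_bytes_per_s: float) -> float:
    """Ideal time to move num_bytes across a link of the given throughput."""
    return num_bytes / link_bytes_per_s * 1000.0


frame_bytes = 224 * 224 * 3  # 150,528 bytes for one 8-bit RGB input
gen2_x1 = 500e6              # ~500 MB/s per direction for PCIe Gen2 x1
print(f"{transfer_time_ms(frame_bytes, gen2_x1):.3f} ms per frame")  # 0.301 ms per frame
```

Set against the roughly 2.5 ms per inference implied by the Coral Mini PCIe’s 400 FPS figure, a transfer of about 0.3 ms is noticeable but not dominant at this input size; larger inputs or slower links shift that balance.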

Are PCIe Accelerator Cards Compatible With All AI Frameworks?

Not always. Many PCIe accelerators work with popular frameworks, but support depends on each vendor’s toolchain, so compatibility with every framework is not guaranteed. NVIDIA GPUs accelerate TensorFlow and PyTorch through CUDA, whereas Coral Edge TPU cards run TensorFlow Lite models compiled with the Edge TPU Compiler, and Hailo-8 modules run models converted from formats such as TensorFlow and ONNX with Hailo’s own compiler. I recommend checking the specific card’s compatibility list and the framework’s supported hardware. Some setups also require additional drivers or software adjustments, so always verify compatibility before purchasing.

What Is the Optimal PCIe Slot Configuration for Inference Cards?

I recommend matching the slot to the card’s connector and lane count. A card with an x16 connector, such as the multi-module Edge TPU cards above, needs an x16 slot, and a slot wired for all 16 lanes gives it maximum bandwidth; a single-module card that uses one lane gains nothing from a wider slot. If your motherboard has multiple slots, space the cards apart to improve airflow and cooling. Avoid sharing lanes with other high-bandwidth devices to prevent bottlenecks. Ultimately, check your motherboard’s specifications to match the PCIe slot configuration with your inference card’s requirements.
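The bandwidth a slot offers can be worked out from its generation and lane count. This Python sketch uses the per-lane transfer rates and line encodings of PCIe Gen1 through Gen5 and ignores packet overhead, so real throughput is somewhat lower.

```python
# Per-lane transfer rate (GT/s) and line-encoding efficiency for each generation.
PCIE_GENERATIONS = {
    1: (2.5, 8 / 10),
    2: (5.0, 8 / 10),
    3: (8.0, 128 / 130),
    4: (16.0, 128 / 130),
    5: (32.0, 128 / 130),
}


def link_bandwidth_gb_s(gen: int, lanes: int) -> float:
    """Approximate one-direction bandwidth in GB/s, before packet overhead."""
    rate_gt_s, efficiency = PCIE_GENERATIONS[gen]
    return rate_gt_s * efficiency * lanes / 8  # 8 bits per byte


print(f"Gen2 x1:  {link_bandwidth_gb_s(2, 1):.2f} GB/s")   # 0.50 GB/s
print(f"Gen3 x16: {link_bandwidth_gb_s(3, 16):.2f} GB/s")  # 15.75 GB/s
```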

How Do Power Requirements Vary Among Different PCIe AI Accelerators?

Imagine upgrading an AI server with a high-end PCIe accelerator: power needs can vary widely. At the high end, a data-center GPU like the NVIDIA A100 typically requires around 300W, demanding a robust power supply and proper cabling. At the other end, the Hailo-8 module above draws about 2.5 W, and the eight-module Youyeetoo card sits in between at 36 to 52 W. These differences shape your power planning, so I always check the manufacturer’s specs to confirm my setup can handle the energy demands safely and efficiently.
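A quick budget check helps before adding a card. This Python sketch sums component power and compares the total with a fraction of the power supply’s rating. The 80% loading limit is a common rule of thumb rather than a standard, and the component wattages in the example are hypothetical apart from the Youyeetoo card’s 52 W upper figure.

```python
def psu_has_headroom(component_watts: list[float], psu_watts: float,
                     max_load_fraction: float = 0.8) -> tuple[bool, float]:
    """Return (fits, total_load) against a rule-of-thumb loading limit."""
    total = sum(component_watts)
    return total <= psu_watts * max_load_fraction, total


# Hypothetical system: 65 W CPU, 50 W for everything else, one 52 W accelerator card.
print(psu_has_headroom([65, 50, 52], psu_watts=300))  # (True, 167)
```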

Can Multiple PCIe Accelerator Cards Be Used Simultaneously?

Yes, you can use multiple PCIe accelerator cards simultaneously. Just make sure your motherboard has enough suitable PCIe slots and lanes for every card. I also configure the system with the proper drivers and software to distribute the workload across the cards. Multiple cards boost throughput, especially for demanding AI inference tasks, but power supply and cooling become more critical with each accelerator you add.
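A simple way to spread work across several accelerators is to assign input streams to devices in turn. This Python sketch does round-robin assignment. The device labels are hypothetical; PyCoral, for instance, accepts strings like `'pci:0'` to select an Edge TPU, as I understand its `make_interpreter` API. Round-robin assumes every stream costs roughly the same, so uneven workloads may need a load-aware scheduler instead.

```python
from itertools import cycle


def assign_streams(streams: list[str], devices: list[str]) -> dict[str, list[str]]:
    """Round-robin each input stream onto an accelerator device."""
    if not devices:
        raise ValueError("need at least one device")
    plan: dict[str, list[str]] = {device: [] for device in devices}
    for stream, device in zip(streams, cycle(devices)):
        plan[device].append(stream)
    return plan


# Hypothetical camera streams spread over two Edge TPUs.
print(assign_streams(["cam0", "cam1", "cam2"], ["pci:0", "pci:1"]))
# {'pci:0': ['cam0', 'cam2'], 'pci:1': ['cam1']}
```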

Conclusion

Imagine your AI projects soaring with blazing speed, like a rocket slicing through the sky. Choosing the right PCIe accelerator is like fueling that rocket—powerful, reliable, and tailored to your needs. With options from Coral’s Edge TPU to Waveshare’s Hailo-8, you’re equipped to push boundaries and access new possibilities. So, gear up, select wisely, and watch your AI performance take off into a future where innovation knows no limits.